The legal and financial landscape of life insurance is built upon a single foundational document: the Insurance Proposal Form. Far from being a mere administrative checklist, this form is a high-stakes legal instrument that dictates the validity of the future contract, determines the premium structure, and serves as the primary legal defense for the insurer in the event of a claim dispute.

For legal teams supporting insurance carriers, compliance officers, and wealth management attorneys, understanding the precise legal weight and inherent risk of the Life Insurance Proposal Form is paramount. Its complexity, combined with ever-shifting regulatory requirements, makes its drafting and review a critical task.

In this authoritative guide, we will conduct an exhaustive analysis of the Insurance Proposal Form, exploring its crucial role in the underwriting process, the strict legal principles that govern its execution, the administrative burden it places on legal departments, and how advanced platforms are leveraging AI to automate compliance and mitigate significant legal exposure.

Key Takeaways:

- The Insurance Proposal Form is a high-stakes legal offer that becomes the foundational, legally binding document used to judge the validity of the entire life insurance contract.

- All insurance contracts are governed by the principle of Uberrimae Fidei (Utmost Good Faith), which legally requires the applicant to disclose every material fact in the proposal.

- Errors, non-disclosures, or misrepresentations in the proposal form are the insurer's primary legal grounds for investigating and potentially voiding the policy during the Contestability Period, which typically lasts two years.

- Manually managing state-specific regulatory compliance and version control for the proposal form creates an unacceptable level of administrative burden and legal risk for high-volume carriers.

- AI-powered collaborative platforms like Wansom automate dynamic clause generation and audit trails, substantially reducing the compliance and legal exposure inherent in traditional proposal drafting.

What is an Insurance Proposal Form?

The Insurance Proposal Form is the definitive, mandatory legal document used by an applicant to formally apply for an insurance policy. It serves as the applicant's legal offer to enter into a contract with the insurance carrier. This form is the basis upon which the carrier performs its risk assessment, known as underwriting, and decides whether to accept the risk, and if so, at what price (premium).

Its legal significance is profound because it is the instrument through which the applicant discharges the duty of Uberrimae Fidei (utmost good faith). By signing the proposal, the applicant declares that all information provided, covering health, financial status, and lifestyle, is true and complete to the best of their knowledge. If the insurer accepts the proposal and issues the policy, the completed and signed proposal form is incorporated as a legal part of the final insurance contract. Any material misstatement or omission in this form, meaning one that would have changed the insurer's decision, gives the insurer grounds to later challenge or void the policy during the Contestability Period. The proposal is therefore the most important legal instrument for establishing policy validity and determining future claim defensibility.

Related Template: Insurance Proposal Form: Customize and Download Instantly with Wansom.ai

What Are the High-Stakes Sections of the Proposal Form?

In contract law, an offer must be clear, unambiguous, and communicated. The Insurance Proposal Form serves as the prospective insured’s formal offer to enter into a contract with the insurance company. The information contained within it is taken as a solemn declaration, forming the basis of the future policy.

1. Form vs. Policy: Understanding the Offer and Acceptance

The distinction between the Insurance Proposal Form and the resulting policy is fundamental to legal analysis:

- The Proposal Form (The Offer): This document details the prospective insured’s information and declares their desire for specific coverage (the Sum Assured). Submitting the completed form, often together with the first premium payment, constitutes the formal offer.

- The Insurance Policy (The Acceptance): This is the final, executed contract. It represents the insurer’s acceptance of the proposer's offer, sometimes with modifications (e.g., charging a higher premium or excluding certain risks) based on their risk assessment (the underwriting process). In contract terms, an acceptance on modified terms is a counter-offer, which the proposer must in turn accept.

Critically, once the policy is issued, the proposal form is legally deemed part of the contract. This incorporation means any statement within the form, even a seemingly minor one, can be cross-referenced against a later claim. The law views the entire form as the foundational representation on which the insurer relied when accepting the risk.

2. Key Sections of a Life Insurance Proposal

For legal and compliance professionals, managing the Life Insurance Proposal Form requires granular attention to its constituent parts, each serving a unique legal or actuarial purpose.

i. Personal and Policy Details: Identifying the Parties and Scope

The initial sections define the contract’s scope and participants.

- Identity of Proposer and Life Assured: The two are often the same person, but clarity is required when a corporation or relative is the Proposer (the person offering to pay the premiums and enter the contract) and another person is the Life Assured (the person whose life is covered). This distinction is vital for establishing Insurable Interest (discussed below).

- Sum Assured and Term: These details set the limits of the insurer’s financial liability. The Sum Assured (the payout amount) and the Policy Term (duration) are direct inputs into the actuarial premium calculation.

- Nomination and Beneficiary Information: This legally crucial section determines who receives the proceeds. Errors here can lead to costly probate disputes. The rules governing a valid Nomination are strictly defined by jurisdictional law and must be complied with from the outset.

ii. Financial and Occupational Information: Assessing Risk Exposure

Insurance is predicated on the financial stability and risk profile of the insured.

- Occupation: The occupation directly influences the premium. A high-risk profession (e.g., deep-sea fisherman, pilot) carries a higher mortality risk than a low-risk office job. The proposer must disclose the exact nature of their duties, not just a broad job title.

- Income and Financial Standing: This information, particularly important for high-value policies, is used to justify the Sum Assured. Insurers must ensure the coverage is commensurate with the applicant’s financial need (a practice known as Financial Underwriting), preventing illegal speculative insurance contracts.

- Existing Insurance and Declined Proposals: Disclosure of existing coverage (especially disability or critical illness policies) helps prevent over-insurance. Disclosure of previously declined proposals tells underwriters that another insurer has already identified a risk worth investigating.

iii. Medical and Lifestyle History: The Foundation of Risk Assessment

This is the most scrutinized and legally sensitive section, designed to prevent Adverse Selection—the tendency of those in poor health to seek more coverage.

- Current Health Status: Requires detailed answers on present illnesses, treatment, and medications.

- Past Medical History: Must account for serious ailments, surgeries, or hospitalizations over a specified look-back period (often 5 to 10 years).

- Family Health History: Details on major hereditary diseases (e.g., certain cancers, heart conditions) within immediate family members, which informs genetic risk modeling.

- Lifestyle Habits: Critical questions regarding smoking status, alcohol consumption, high-risk hobbies (e.g., mountaineering), and travel to volatile regions.
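The look-back period in the medical history section amounts to a date-window filter. The Python sketch below is a simplified illustration under stated assumptions: the 5-year default is one point in the 5-to-10-year range mentioned above, real forms often set a different look-back per question (some ask about conditions ever diagnosed), and `MedicalEvent` and `events_in_lookback` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class MedicalEvent:  # hypothetical record type
    description: str
    event_date: date


def years_before(d: date, years: int) -> date:
    """Shift a date back by whole years; 29 February falls back to 28 February."""
    try:
        return d.replace(year=d.year - years)
    except ValueError:
        return d.replace(year=d.year - years, day=28)


def events_in_lookback(events, application_date: date, lookback_years: int = 5):
    """Return events dated within the look-back window that ends on the application date."""
    cutoff = years_before(application_date, lookback_years)
    return [e for e in events if cutoff <= e.event_date <= application_date]


# Usage with hypothetical history entries.
history = [
    MedicalEvent("Appendectomy", date(2012, 3, 1)),
    MedicalEvent("Hypertension diagnosis", date(2021, 6, 15)),
]
print([e.description for e in events_in_lookback(history, date(2024, 1, 10))])
# ['Hypertension diagnosis']
```

Widening `lookback_years` to 12 would bring the 2012 surgery back into scope, which is why the exact look-back wording on each question matters.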

iv. The Declaration and Signature: The Point of Legal Vulnerability

The final section transforms the document from an informational sheet into a binding legal representation.

- The Affirmation: The proposer affirms that all statements are true and complete to the best of their knowledge and belief.

- Consent Clauses: The declaration often includes clauses granting the insurer permission to access medical records (HIPAA Authorization) and to share information with reinsurers or mortality databases.

- Signature: The signature legally binds the proposer to the declarations, establishing the document as the legal basis of the insurance contract.

Why is the Proposal Form Non-Negotiable?

The fundamental necessity of the Life Insurance Proposal Form is rooted in two bedrock principles of insurance law and economics: the doctrine of uberrimae fidei and the challenge of asymmetric information.

1. The Doctrine of Utmost Good Faith (Uberrimae Fidei)

Unlike most commercial contracts, where the principle is caveat emptor (let the buyer beware), insurance contracts are contracts of uberrimae fidei. This doctrine mandates that the applicant has an affirmative duty to disclose every material fact that they know, or ought to know, and which may influence the insurer's decision.

This is a higher standard of disclosure than in general contract law. The Proposal Form is the instrument that satisfies this legal mandate. By signing the declaration, the proposer affirms they have acted in utmost good faith. Failure to do so—even if unintentional—can breach this essential legal principle.

2. The Problem of Asymmetric Information in Insurance Law

Asymmetric information occurs when one party to a transaction (the applicant) holds crucial information that the other party (the insurer) does not. In life insurance, the applicant knows their true health status and personal habits better than anyone else.

If insurers simply took every applicant at face value, they would be exposed to two classic market failures:

- Moral Hazard: Applicants might engage in riskier behavior after obtaining the policy, knowing the payout is secured.

- Adverse Selection: Individuals with known, high-risk health conditions would be the most eager to purchase insurance, disproportionately raising the average risk of the pool and potentially collapsing the entire actuarial model.

The Insurance Proposal Form is the underwriter’s defense against adverse selection. It forces the disclosure of material facts, allowing the insurer to underwrite each applicant accurately and set a fair premium for the actual risk level, thus maintaining the financial solvency of the risk pool.
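A small worked example shows why pricing without disclosure breaks down. The Python sketch below uses entirely hypothetical figures (a 100,000 sum assured, mortality rates of 1 and 10 deaths per 1,000 lives, and a 90/10 pool split) and ignores expenses, interest, and profit; it is an illustration of the mechanism, not an actuarial model.

```python
def average_expected_claim_cost(pool: dict, sum_assured: float) -> float:
    """Average expected annual death-claim cost per policy in a pool.

    `pool` maps an annual mortality rate, in deaths per 1,000 lives, to the
    number of policyholders at that rate. Expenses, interest, and profit are ignored.
    """
    lives = sum(pool.values())
    expected_claims = sum(
        sum_assured * rate_per_mille / 1000 * count
        for rate_per_mille, count in pool.items()
    )
    return expected_claims / lives


SUM_ASSURED = 100_000  # hypothetical

# Hypothetical pool: 90 low-risk lives (1 per 1,000) and 10 high-risk lives (10 per 1,000).
mixed = {1: 90, 10: 10}
print(average_expected_claim_cost(mixed, SUM_ASSURED))  # 190.0

# If a flat price drives half of the low-risk lives away, the average cost rises.
after_exit = {1: 45, 10: 10}
print(round(average_expected_claim_cost(after_exit, SUM_ASSURED), 2))  # 263.64
```

At a flat price of about 190, a low-risk applicant pays nearly twice their own expected cost of 100, while a high-risk applicant pays less than a fifth of their expected cost of 1,000. The disclosures in the proposal form are what let the insurer price each group on its own risk instead.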

3. Financial Justification: Insurable Interest and Sum Assured

A life insurance contract is not valid unless the proposer possesses insurable interest in the life of the person being insured. This is a critical legal requirement designed to prevent betting or speculative contracts on human life.

The proposal form addresses this by asking about the relationship between the proposer and the life assured, and by requiring detailed financial information.

- Establishing Interest: A spouse has an insurable interest in their partner's life, and a business has one in the life of a key executive. The form legally documents this relationship.

- Proportionality: The insurer analyzes the requested Sum Assured against the proposer’s income, net worth, and liabilities. If a low-income individual applies for a vastly disproportionate death benefit (e.g., $10 million), the underwriter is expected to flag it as potential speculation, fraud, or a money-laundering risk. The form provides the data for this essential due diligence.
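Both checks can be expressed as a simple pre-underwriting screen that raises flags for a human underwriter. The Python sketch below makes several assumptions: the set of relationships presumed to carry insurable interest is hypothetical and non-exhaustive (actual rules come from each jurisdiction's law), the 20x income multiple is an arbitrary placeholder rather than an industry standard, and only income is considered, not net worth or liabilities.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical, non-exhaustive relationships treated as carrying insurable interest.
PRESUMED_INTEREST = {"self", "spouse", "key_person_employer"}


@dataclass
class ProposalSummary:  # hypothetical record type
    relationship: str     # proposer's relationship to the life assured
    sum_assured: float
    annual_income: float  # proposer's income, as described above


def screen_proposal(p: ProposalSummary, max_income_multiple: float = 20.0) -> List[str]:
    """Return red flags for an underwriter to review; an empty list means no flags."""
    flags = []
    if p.relationship not in PRESUMED_INTEREST:
        flags.append("insurable interest must be documented")
    if p.annual_income <= 0 or p.sum_assured > max_income_multiple * p.annual_income:
        flags.append("sum assured disproportionate to income")
    return flags


print(screen_proposal(ProposalSummary("spouse", 500_000, 60_000)))     # []
print(screen_proposal(ProposalSummary("self", 10_000_000, 30_000)))
# ['sum assured disproportionate to income']
```

The function returns flags rather than a decision, matching the text's point that the form supplies data for due diligence; the judgment itself stays with the underwriter.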

The Legal Side of the Insurance Proposal Form

The most significant legal risk associated with the Life Insurance Proposal Form revolves around the concept of disclosure. Mistakes in this document are not merely clerical; they are potential breaches of contract that can invalidate a policy when the beneficiaries need it most.

1. Defining Material Misrepresentation: The Legal Standard

Material misrepresentation in insurance is the act of providing false information, or omitting a fact, that would have changed the insurer’s decision to issue the policy or the terms on which it was issued.

The burden of proof often lies with the insurer, who must typically demonstrate three things:

- Falsity: The statement made in the proposal form was, in fact, untrue.

- Materiality: The correct information would have significantly influenced the insurer’s decision (e.g., they would have declined the policy or charged a much higher premium).

- Reliance: The insurer genuinely relied on the false information when issuing the policy.

The critical term here is material. If an applicant misstated their weight by two pounds, the statement is false but likely not material. However, if they failed to disclose a diagnosis of chronic heart failure three months prior, the omission is highly material and gives the insurer grounds to void the contract ab initio (from the beginning).
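Because the three elements are conjunctive, the insurer's case fails if any one of them is missing. The Python sketch below encodes only that structure, as described above: each boolean stands for a legal finding that a court or claims examiner makes on the evidence, the class and function names are hypothetical, and the exact elements and burden of proof vary by jurisdiction.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MisrepresentationFinding:  # hypothetical record of legal findings
    statement_false: bool        # Falsity: the proposal statement was untrue
    would_change_decision: bool  # Materiality: the truth would have changed the decision or terms
    insurer_relied: bool         # Reliance: the insurer relied on the statement when issuing


def unmet_elements(f: MisrepresentationFinding) -> List[str]:
    """List the elements not established; an empty list means all three are met."""
    elements = {
        "falsity": f.statement_false,
        "materiality": f.would_change_decision,
        "reliance": f.insurer_relied,
    }
    return [name for name, established in elements.items() if not established]


weight_off_by_two_pounds = MisrepresentationFinding(True, False, True)
undisclosed_heart_failure = MisrepresentationFinding(True, True, True)
print(unmet_elements(weight_off_by_two_pounds))   # ['materiality']
print(unmet_elements(undisclosed_heart_failure))  # []
```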

2. The Contestability Period: The Legal Window of Vulnerability

Nearly all life insurance policies include a Contestability Period, typically lasting the first two years after the policy is issued. This period is the legal window during which the insurer may investigate and challenge the validity of the policy based on alleged misstatements in the Insurance Proposal Form.

- Death During the Period: If the insured dies during this two-year window, the insurer is legally entitled to, and in practice routinely does, conduct a full investigation into the representations made in the proposal form. Investigators will scrutinize medical records, pharmacy databases, and lifestyle claims.

- Post-Contestability: After the period expires, most policies become legally incontestable. At this point, the insurer generally loses the right to challenge the policy’s validity based on prior misrepresentations (exceptions often exist for outright fraud).

For legal teams managing high-value policies, the integrity of the proposal form is the primary focus during this two-year period, as it represents the highest point of legal vulnerability for the policy’s payout.
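Whether a claim falls inside the window is a date calculation. The Python sketch below assumes the period runs from the issue date and ends on its second anniversary; in practice the exact trigger (issue date or effective date, and whether the insured must survive the period) comes from the policy wording and state law, so `period_years` and the boundary convention are assumptions, and the function names are hypothetical.

```python
from datetime import date


def add_years(d: date, years: int) -> date:
    """Shift a date forward by whole years; 29 February falls back to 28 February."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)


def within_contestability(issue_date: date, date_of_death: date, period_years: int = 2) -> bool:
    """True if death occurred on or after issue and before the period's end date."""
    return issue_date <= date_of_death < add_years(issue_date, period_years)


issue = date(2023, 3, 15)
print(within_contestability(issue, date(2024, 11, 1)))  # True: inside the window
print(within_contestability(issue, date(2025, 3, 15)))  # False: period has ended
```

Treating the end date as exclusive is one convention; a policy that remains contestable through the anniversary itself would need `<=` instead.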

3. Implications for Legal Teams: Vetting the Initial Proposal

Attorneys specializing in estate planning, trusts, and corporate succession often advise their clients on life insurance purchases. Their due diligence must extend to vetting the proposal itself, not just the policy terms.

- Disclosure Review: Legal counsel should work with the client to systematically review every declaration in the proposal form, cross-referencing against available medical and financial records to ensure absolute accuracy.

- Proposer Identity: Confirming that the Proposer has a legally recognized Insurable Interest in the Life Assured prevents a future challenge based on lack of insurable interest.

- Documentation Integrity: Ensuring that the final, signed version of the form was properly executed and that all requisite jurisdictional disclosures were attached is a compliance mandate that cannot be delegated to non-legal staff. The risk of the client's family facing a claim denial is too great.

What Legal Professionals Need to Know about the Insurance Proposal Form

The legal complexities of the Insurance Proposal Form translate directly into massive administrative and compliance overhead for the legal departments tasked with its creation, maintenance, and deployment. This is the operational bottleneck that requires a strategic technological solution.

1. Jurisdiction and Regulatory Compliance Challenges

For carriers operating across multiple states or international borders, the creation and management of the master Insurance Proposal Form template is a Sisyphean task.

- State-Specific Amendments: Contestability period lengths, required disclosure language, and anti-fraud warnings are often mandated at the state level. A carrier must maintain dozens, if not hundreds, of slightly modified, yet legally distinct, master forms.

- Data Privacy (HIPAA/GDPR): The medical and financial data collected by the form is highly sensitive. The consent and disclosure clauses in the proposal must be kept continually up to date with the latest data governance mandates (such as specific language concerning permission to access patient medical records).

- Digital Execution Compliance: As carriers shift to electronic proposals (e-Apps), the legal team must ensure that the digital signature capture process and the electronic audit trail meet the stringent legal requirements for non-repudiation and enforceability, so that an electronically signed proposal is as enforceable as a physically signed one.

2. The Cost of Manual Drafting: Human Error and Version Control Risk

In traditional legal workflows, the management of proposal forms is slow, manual, and introduces unacceptable levels of risk.

- Drafting Bottlenecks: When a new regulatory rule is published, legal teams must manually update the master template, which involves legal research, drafting new clauses, internal review cycles, and final approval. This process can take weeks, leaving the business exposed to compliance risk in the interim.

- The Version Control Nightmare: A single master document can splinter into dozens of unmanaged versions as different departments (Underwriting, Sales, Compliance) make edits. The risk of an agent mistakenly using an obsolete form, one lacking a crucial, recently mandated disclosure clause, is high, and the resulting non-compliant contracts could jeopardize an entire portfolio.

- Time Allocation: Legal counsel are forced to dedicate valuable time to the repetitive, low-value work of verifying boilerplate language and managing version control, diverting resources away from strategic functions like complex litigation or new product development.

This administrative burden highlights a critical need not just for templates, but for a dynamic, intelligent system that can automatically manage the legal accuracy of high-volume documentation.
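One safeguard against the obsolete-form risk described above is to check, at submission time, that the signed form is the version approved for that state on the signing date. The Python sketch below assumes a simple in-memory registry; the form IDs, dates, and function names are hypothetical, and a production system would read from the carrier's document management records.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional


@dataclass(frozen=True)
class FormVersion:
    form_id: str
    effective: date                 # first day the version may be used
    retired: Optional[date] = None  # first day it may no longer be used


def version_in_force(registry: Dict[str, List[FormVersion]], state: str, on: date) -> Optional[FormVersion]:
    """Return the approved version for `state` on date `on`, or None if there is none."""
    for v in registry.get(state, []):
        if v.effective <= on and (v.retired is None or on < v.retired):
            return v
    return None


def accept_submission(registry, state: str, form_id: str, signed_on: date) -> bool:
    """Accept only if the signed form is the version in force for that state on that date."""
    current = version_in_force(registry, state, signed_on)
    return current is not None and current.form_id == form_id


# Hypothetical registry for one state.
registry = {
    "TX": [
        FormVersion("LIP-TX-v3", date(2022, 1, 1), retired=date(2024, 7, 1)),
        FormVersion("LIP-TX-v4", date(2024, 7, 1)),
    ]
}
print(accept_submission(registry, "TX", "LIP-TX-v3", date(2024, 9, 2)))  # False: obsolete form
print(accept_submission(registry, "TX", "LIP-TX-v4", date(2024, 9, 2)))  # True
```

Checking against the signing date rather than today's date means a form legitimately signed under the old version before its retirement is still accepted.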

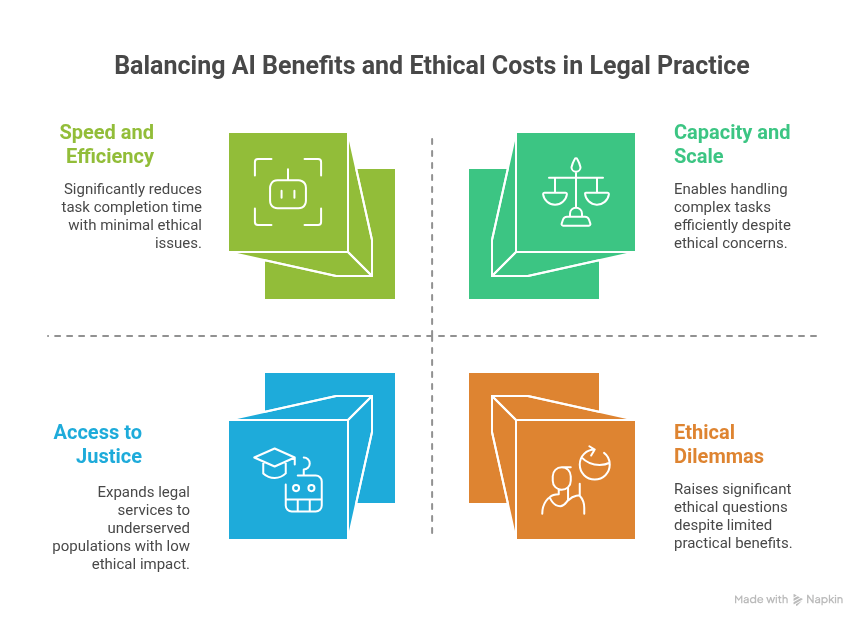

The Future of Proposal Drafting: Security and Automation with AI

The complexity, volume, and inherent legal risk associated with the Insurance Proposal Form make it an ideal candidate for strategic automation. This is the core problem Wansom, a secure, AI-powered collaborative workspace for legal teams, is designed to solve.

1. Wansom’s Approach: Automating Legal Accuracy and Compliance

Wansom transforms the creation of high-stakes documents from a static, error-prone manual process into a dynamic, compliant workflow. Our system is built to serve the legal team first, providing a secure platform to manage document intelligence.

- Dynamic Clause Engine: Wansom’s Insurance Proposal Form template is powered by a dynamic clause library. This engine allows the legal team to set logic-based rules: selecting "Life Insurance, Term, State: Texas" automatically generates the Texas-specific disclosure and contestability language, so the correct state wording is inserted without manual assembly. This is AI document drafting tailored for legal precision.

- Real-Time Regulatory Intelligence: The platform facilitates seamless integration of the latest regulatory data. When a state revises its required policy language, in-house counsel can update the master clause in Wansom, and the change is immediately propagated across all active templates, eliminating version control risk.

- Streamlined Legal Research Integration: Wansom’s AI legal research capabilities allow legal professionals to instantly verify the statutory authority underlying a specific clause within the proposal form. Instead of leaving the drafting environment to search for case law or legislative text, the legal context is at their fingertips, accelerating the document review cycle.
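The rule-based clause selection and single-point update described in the first two items can be illustrated with a small lookup table. This is not Wansom's implementation, which the text does not describe; it is a generic Python sketch in which the clause IDs, state codes, and bracketed placeholder wording are all hypothetical.

```python
# Hypothetical clause library keyed by (clause_id, state); "*" marks the default wording.
CLAUSES = {
    ("contestability", "*"): "[default contestability wording]",
    ("contestability", "TX"): "[Texas contestability wording]",
    ("fraud_warning", "*"): "[default fraud warning]",
}

# Templates store clause IDs, not copies of clause text.
TEMPLATES = {"life_term": ["contestability", "fraud_warning"]}


def resolve_clause(library, clause_id, state):
    """Prefer a state-specific clause; otherwise fall back to the default wording."""
    if (clause_id, state) in library:
        return library[(clause_id, state)]
    return library[(clause_id, "*")]  # raises KeyError if no default exists


def render(template_name, state, library=CLAUSES, templates=TEMPLATES):
    """Assemble a template by resolving each clause ID for the given state."""
    return "\n\n".join(resolve_clause(library, cid, state) for cid in templates[template_name])


print(render("life_term", "TX"))

# Counsel revises the master fraud warning once...
CLAUSES[("fraud_warning", "*")] = "[revised fraud warning]"
# ...and every template that references it picks up the change on its next render.
print(render("life_term", "CA"))
```

The propagation comes from storing references (clause IDs) in templates and resolving them at render time; a template that pasted clause text in directly would need to be edited one copy at a time.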

2. Beyond Templates: Secure, Collaborative Document Integrity

The value of a platform like Wansom extends far beyond simple template generation; it addresses the critical need for security and collaborative integrity in handling highly sensitive documents.

- Single Source of Truth: All legal documents, from the master Insurance Proposal Form to every executed policy, reside in a secure, central workspace. This eliminates fragmented files and ensures that every team member (Legal, Compliance, Underwriting) is working from the single, latest, legally approved version.

- Enhanced Auditability and Non-Repudiation: For every proposal form drafted and finalized in Wansom, the system generates an immutable audit trail. This log records every edit, every reviewer, and the final electronic execution metadata. In the event of a claim dispute, the legal team has strong evidence that the form used was legally compliant at the time of signing and that the declarations were properly presented to and signed by the proposer.

- Secure Data Handling: The platform is built with institutional-grade security protocols, ensuring that the sensitive medical and financial information collected during the proposal process is handled in a manner compliant with the highest data governance standards, mitigating exposure to data breach or privacy violation claims.
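The text does not say how Wansom makes its audit trail immutable. One widely used technique is a hash chain, in which each entry's hash covers the previous entry's hash, so altering any stored record breaks verification from that point on. The Python sketch below uses only the standard library; the record fields are hypothetical and must be JSON-serializable. Note that a hash chain makes tampering detectable rather than impossible: anyone able to rewrite the whole log could recompute every hash, so real systems typically also sign entries or anchor the latest hash somewhere the editor cannot change.

```python
import hashlib
import json


def _entry_hash(prev_hash: str, record: dict) -> str:
    """Hash the previous entry's hash together with a canonical JSON form of the record."""
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []  # list of (record, hash) tuples

    def append(self, record: dict) -> str:
        prev = self.entries[-1][1] if self.entries else self.GENESIS
        stored = dict(record)  # shallow copy so later edits by the caller are not silently absorbed
        h = _entry_hash(prev, stored)
        self.entries.append((stored, h))
        return h

    def verify(self) -> bool:
        """Recompute every hash in order; any mismatch means a record was altered."""
        prev = self.GENESIS
        for record, h in self.entries:
            if _entry_hash(prev, record) != h:
                return False
            prev = h
        return True


log = AuditLog()
log.append({"event": "clause_edit", "user": "counsel_a", "form_id": "LIP-TX-v4"})
log.append({"event": "e-signature", "user": "proposer", "form_id": "LIP-TX-v4"})
print(log.verify())  # True
log.entries[0][0]["user"] = "someone_else"  # simulate tampering with a stored record
print(log.verify())  # False
```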

Conclusion

The Insurance Proposal Form is the most crucial document in the life insurance cycle. Its integrity directly impacts the solvency of the carrier and the financial security of the insured’s family. For legal teams, the manual management of these forms represents a colossal expenditure of time, a constant threat of regulatory non-compliance, and an unacceptable risk of material error that could lead to costly litigation.

Modern legal strategy demands a shift from reactive document management to proactive, secure automation. By adopting an AI-powered collaborative workspace, legal teams can ensure that every Life Insurance Proposal Form they deploy is legally accurate, instantly compliant, and defensible in court.

If your legal team is still struggling with version control, manual compliance checks, or lengthy document review cycles, it’s time to modernize your workflow.

The complexity of the Life Insurance Proposal Form is no match for Wansom’s secure, AI-powered document drafting capabilities.

Customize and Download Wansom’s Authority-Grade Insurance Proposal Form Template Instantly to see how our platform transforms compliance and drastically reduces your drafting risk. Start building smarter, more secure legal documents today.