AI is rapidly transforming from a futuristic concept into an indispensable tool in the modern legal workflow. For law firms and in-house legal teams, systems powered by large language models (LLMs) and predictive analytics are driving efficiency gains across legal research, document drafting, contract review, and even litigation prediction. This technological shift promises to alleviate drudgery, optimize costs, and free lawyers to focus on high-value strategic counsel.

However, the powerful capabilities of AI are inseparable from serious ethical responsibilities, risks, and professional trade-offs. The legal profession operates on a foundation of trust, competence, and accountability. Introducing a technology that can make errors, perpetuate biases, or compromise client data requires proactive risk management and a commitment to professional duties that supersede technological convenience.

At Wansom, our mission is to equip legal teams with the knowledge and the secure, auditable tools necessary to navigate this new landscape, build client trust, and avoid the substantial risks associated with unregulated AI adoption.

Key Takeaways:

- Competence demands that lawyers always verify AI outputs, because the tool can fabricate legal authorities ("hallucinate").

- Legal teams have a duty of Fairness requiring them to actively audit AI tools for inherent bias that can lead to discriminatory or unjust outcomes for clients.

- Maintaining Client Confidentiality necessitates using only AI platforms with robust data security policies that strictly guarantee client data is not used for model training.

- To ensure Accountability and avoid malpractice risks, law firms must implement clear human oversight and detailed record-keeping for every AI-assisted piece of legal advice.

- Ethical adoption requires prioritizing Explainability and Transparency by ensuring clients understand when AI contributed to advice and how the resulting conclusion was reached.

Why Ethical Stakes Are Real

The ethics of AI in law is not a peripheral concern; it is central to preserving the integrity of the profession and the administration of justice itself. The consequences of ethical missteps are severe and multifaceted:

1. Client Trust and Professional Reputation

An AI-driven mistake, such as relying on a fabricated case citation, can instantly shatter client trust. The resulting reputational damage can be irreparable, leading to disciplinary sanctions, loss of business, and long-term damage to the firm's standing in the legal community. Lawyers are trusted advisors, and that trust is fundamentally based on the verified accuracy and integrity of their counsel.

2. Legal Liability and Regulatory Exposure

Attorneys are bound by rigorous codes of conduct, including the American Bar Association (ABA) Model Rules of Professional Conduct. Missteps involving AI can translate directly into malpractice claims, sanctions from state bar associations, or other disciplinary actions. As regulatory bodies catch up to the technology, firms must anticipate and comply with new rules governing data use, transparency, and accountability.

3. Justice, Fairness, and Access

The most profound stakes lie in the commitment to justice. If AI systems used in legal workflows (e.g., risk assessment, document review for disadvantaged litigants) inherit or amplify historical biases, they can lead to unfair or discriminatory outcomes. Furthermore, if the cost or complexity of high-quality AI tools exacerbates the resource gap between large and small firms, it can negatively impact access to competent legal representation for vulnerable parties. Ethical adoption must always consider the societal impact.

Key Ethical Challenges and Detailed Mitigation Strategies

The introduction of AI into the legal workflow activates several core ethical duties. Understanding these duties and proactively developing mitigation strategies is essential for any firm moving forward.

1. Competence and the Risk of Hallucination

The lawyer’s fundamental duty of Competence (ABA Model Rule 1.1) requires not only legal knowledge and skill but also a grasp of relevant technology. Using AI does not outsource this duty; it expands it.

The Problem: Hallucinations and Outdated Law

Generative AI’s primary ethical risk is the phenomenon of hallucinations, where the tool confidently fabricates non-existent case citations, statutes, or legal principles. Relying on these outputs is a clear failure of due diligence and competence, as demonstrated by several recent court sanctions against lawyers who submitted briefs citing fake AI-generated cases. Similarly, AI models trained on static or general datasets may fail to incorporate the latest legislative changes or jurisdictional precedents, leading to outdated or incorrect advice.

Mitigation and Best Practices

- The Human Veto and Review: AI must be treated strictly as an assistive tool, not a replacement for final legal judgment. Every AI-generated output that involves legal authority (citations, statutes, contractual language) must be subjected to thorough human review and verification against primary sources.

- Continuous Technological Competence: Firms must implement mandatory, ongoing training for all legal professionals on the specific capabilities and, critically, the limitations of the AI tools they use. This includes training on recognizing overly confident but false answers.

- Vendor Due Diligence: Law firms must vet AI providers carefully, confirming the currency, scope, and provenance of the legal data the model uses.
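One way to make the human review step concrete is to turn every citation-like string in an AI draft into a checklist item that stays unverified until a named reviewer confirms it against a primary source. The Python sketch below is illustrative only: the regular expression is a deliberately narrow, hypothetical pattern covering a few U.S. reporter formats, and the names `CitationCheck`, `build_checklist`, and `ready_to_file` are assumptions made for this example, not part of any real tool.

```python
import re
from dataclasses import dataclass

# Hypothetical, deliberately narrow pattern: "<volume> <reporter> <page>".
# Real citation formats are far more varied, so a miss here proves nothing.
CITATION_PATTERN = re.compile(
    r"\b\d{1,4}\s+(?:U\.S\.|F\.(?:2d|3d|4th)?|F\. Supp\.(?: 2d| 3d)?)\s+\d{1,5}\b"
)


@dataclass
class CitationCheck:
    citation: str
    verified: bool = False  # set to True only by a human reviewer
    reviewer: str = ""


def build_checklist(ai_text: str) -> list[CitationCheck]:
    """Collect each distinct citation-like string as an unverified item."""
    seen: list[str] = []
    for match in CITATION_PATTERN.finditer(ai_text):
        citation = match.group(0)
        if citation not in seen:
            seen.append(citation)
    return [CitationCheck(citation) for citation in seen]


def mark_verified(item: CitationCheck, reviewer: str) -> None:
    """Record that a named reviewer confirmed the authority at its source."""
    item.verified = True
    item.reviewer = reviewer


def ready_to_file(checklist: list[CitationCheck]) -> bool:
    """True only when every extracted citation has a named human reviewer."""
    return all(item.verified and item.reviewer for item in checklist)
```

The script does not decide whether a citation is real. It only prevents a draft from being treated as ready while any extracted authority lacks a human sign-off, and an empty checklist should still prompt a manual read because the pattern can miss citations.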

2. Bias, Fairness, and Discrimination

AI tools are trained on historical data, which inherently reflects societal and systemic biases—be they racial, gender, or socioeconomic. When this biased data is used to train models for tasks like predictive analysis, risk assessment, or even recommending litigation strategies, those biases can be baked in and amplified.

The Problem: Amplified Inequity

If an AI model for criminal defense risk assessment is trained predominantly on data reflecting historically disproportionate policing, it may unfairly predict a higher risk for minority clients, thus recommending less aggressive defense strategies. This directly violates the duty of Fairness to the client and risks claims of discrimination or injustice.

Mitigation and Best Practices

- Data Audit and Balancing: Firms should audit or, at minimum, request transparency from vendors regarding the diversity and representativeness of the training data. Where possible, internal uses should employ fairness checks on outputs before they are applied to client work.

- Multidisciplinary Oversight: Incorporate fairness impact assessments before deploying a new tool. This requires input not just from the IT department, but also from ethics advisors and diverse members of the legal team.

- Transparency in Input Selection: When using predictive AI, be transparent internally about the data points being fed into the model and consciously exclude data points that could introduce or perpetuate systemic bias.
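A fairness check on outputs can start very simply: compare how often a tool flags people in different groups. The Python sketch below computes the flag rate per group and the gap between the highest and lowest rate, one common first-pass measure sometimes called a demographic parity difference. The field names `group` and `high_risk`, and the idea of escalating when the gap exceeds a threshold, are assumptions for illustration. A large gap is a prompt for human investigation, not proof of bias, and a small gap does not rule bias out.

```python
from collections import defaultdict


def flag_rates(records, group_key="group", flag_key="high_risk"):
    """Return the share of records flagged by the tool, per group."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        if record[flag_key]:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group flag rates."""
    return max(rates.values()) - min(rates.values())


def needs_review(records, max_gap=0.2):
    """Hypothetical escalation rule: the 0.2 default is an assumed value."""
    return parity_gap(flag_rates(records)) > max_gap
```

A firm would set the threshold, the grouping, and the follow-up process through its own multidisciplinary review rather than take the default used here.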

3. Client Confidentiality and Data Protection

The practice of law involves handling highly sensitive, proprietary, and personal client information. This creates a critical duty to protect Client Confidentiality (ABA Model Rule 1.6) and to comply with rigorous data protection laws (e.g., GDPR, CCPA).

The Problem: Data Leakage and Unintended Training

Using generic or public-facing AI tools carries the risk that proprietary client documents or privileged data could be inadvertently submitted and then retained by the AI provider to train their next-generation models. This constitutes a profound breach of confidentiality, privilege, and data protection laws. Data processed by third-party cloud services without robust encryption and contractual safeguards is highly vulnerable to breaches.

Mitigation and Best Practices

- Secure, Privacy-Preserving Tools: Only use AI tools, like Wansom, that offer robust, end-to-end encryption and are explicitly designed for the legal profession.

- Vendor Contractual Guarantees: Mandate contractual provisions with AI providers that prohibit the retention, analysis, or use of client data for model training or any purpose beyond servicing the client firm. Data ownership and deletion protocols must be clearly defined.

- Data Minimization: Implement policies that restrict the type and amount of sensitive client data that can be input into any third-party AI system.
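A data minimization policy can be backed by a small pre-processing step that strips obvious identifiers before any text leaves the firm. The Python sketch below replaces email addresses, U.S.-style Social Security numbers, and ten-digit phone numbers with placeholders and reports how many of each it removed. The patterns and the `minimize` function are assumptions for illustration and are far from exhaustive: names, addresses, and matter-specific facts will pass straight through, so this complements contractual and technical safeguards rather than replacing them.

```python
import re

# Illustrative patterns only; real identifiers take many more forms.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def minimize(text: str) -> tuple[str, dict[str, int]]:
    """Replace matched identifiers with placeholders and count each type."""
    counts: dict[str, int] = {}
    for label, pattern in REDACTION_PATTERNS.items():
        text, replaced = pattern.subn(f"[{label}]", text)
        counts[label] = replaced
    return text, counts
```

The returned counts can be logged so that reviewers see how much sensitive material staff attempted to submit, which helps refine the policy over time.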

4. Transparency and Explainability (The Black Box Problem)

If an AI tool arrives at an outcome (e.g., recommending a settlement figure or identifying a key precedent) without providing the clear, logical steps and source documents for that reasoning, it becomes a "black box."

The Problem: Eroded Trust and Accountability

A lawyer has a duty to communicate effectively and fully explain the basis for their advice. If the lawyer cannot articulate why the AI recommended a certain strategy, client trust suffers, and the lawyer fails their duty to inform. Furthermore, if the output is challenged in court, lack of explainability compromises the lawyer's ability to defend the advice and complicates the identification of accountability.

Mitigation and Best Practices

- Prefer Auditable Tools: Choose AI platforms that provide clear, verifiable rationales for their outputs, citing the specific documents or data points used to generate the result.

- Mandatory Documentation: Law firms must establish detailed record-keeping requirements that document which AI tool was used, how it was used, what the output was, and who on the legal team reviewed and signed off on it before it was presented to the client or court.

- Client Disclosure: Implement a policy for disclosing to clients when and how AI contributed materially to the final advice or document, including a clear explanation of its limitations and the extent of human oversight.
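The documentation requirement above maps naturally onto a structured record: which tool, for what purpose, what it produced, and who signed off. The Python sketch below is one possible shape for such a record, not a description of how any particular platform stores its logs. All field names are assumptions. It stores a SHA-256 hash of the output rather than the text itself, so the log can show that a specific output was reviewed without duplicating confidential content.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AIUsageRecord:
    matter_id: str        # internal matter reference (hypothetical field)
    tool_name: str        # which AI tool was used
    purpose: str          # how it was used, e.g. "first draft of NDA"
    output_sha256: str    # fingerprint of the exact output reviewed
    reviewed_by: str      # attorney who reviewed and signed off
    approved: bool        # outcome of the review
    recorded_at: str      # UTC timestamp in ISO 8601 format


def record_usage(matter_id, tool_name, purpose, output_text, reviewed_by, approved):
    """Build an immutable record for one AI-assisted work product."""
    digest = hashlib.sha256(output_text.encode("utf-8")).hexdigest()
    return AIUsageRecord(
        matter_id=matter_id,
        tool_name=tool_name,
        purpose=purpose,
        output_sha256=digest,
        reviewed_by=reviewed_by,
        approved=approved,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )


def to_log_line(record: AIUsageRecord) -> str:
    """Serialize the record as one JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)
```

Writing each record as a single JSON line keeps the log easy to append to and to search later if advice is challenged.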

5. Accountability, Liability, and Malpractice

When an AI-driven error occurs—a missed precedent, a misclassification of a privileged document, or wrong advice—the question of Accountability must be clear.

The Problem: The Blurry Line of Responsibility

The regulatory and ethical framework is still catching up. Who is ultimately responsible for an AI error? The developer? The firm? The individual lawyer who relied on the tool? Current ethical rules hold the lawyer who signs off on the work fully accountable. Over-reliance on AI without proper human oversight is a direct pathway to malpractice claims.

Mitigation and Best Practices

- Defined Roles and Human Oversight: Clear internal policies must define the roles and responsibilities for AI usage, ensuring that a licensed attorney is designated as the "human in the loop" for every material AI-assisted task.

- Internal Audit Trails: Utilize tools (like Wansom) that create a detailed audit trail and version control showing every human review and sign-off point.

- Insurance Review: Firms must confirm that their professional liability insurance policies are updated to account for and cover potential errors or omissions stemming from the use of AI technology.

Establishing a Robust Governance Framework

Ethical AI adoption requires more than good intentions; it demands structural governance and clear, enforced policies that integrate ethical requirements into daily operations.

1. Clear Internal Policies and Governance

A comprehensive policy manual for AI use should be mandatory. This manual must address:

- Permitted Uses: Clearly define which AI tools can be used for which tasks (e.g., permitted for summarizing, not permitted for final legal advice).

- Review Thresholds: Specify the level of human review required based on the task’s risk profile (e.g., a simple grammar check needs less review than a newly drafted complaint).

- Prohibited Submissions: Explicitly prohibit the input of highly sensitive client data into general-purpose, non-auditable AI models.

- Data Handling: Establish internal protocols for client data deletion and data sovereignty, ensuring compliance with global privacy regulations.
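Permitted uses and review thresholds are easier to enforce when they live in one machine-readable place rather than only in a manual. The Python sketch below shows one way to encode them. The task names, the three review levels, and the choice to default unknown tasks to the strictest level are all assumptions for illustration, and each firm would substitute its own categories.

```python
from enum import IntEnum


class ReviewLevel(IntEnum):
    SPOT_CHECK = 1        # e.g. a simple grammar check
    ATTORNEY_REVIEW = 2   # full read-through by a licensed attorney
    PARTNER_SIGN_OFF = 3  # highest-risk work, e.g. a newly drafted complaint


# Hypothetical policy table: task type -> minimum human review required.
REVIEW_POLICY = {
    "grammar_check": ReviewLevel.SPOT_CHECK,
    "summarization": ReviewLevel.ATTORNEY_REVIEW,
    "draft_complaint": ReviewLevel.PARTNER_SIGN_OFF,
}

# Tasks that AI tools may not perform at all under this example policy.
PROHIBITED_TASKS = {"final_legal_advice"}


def required_review(task: str) -> ReviewLevel:
    """Return the minimum review level for a task, or refuse it outright."""
    if task in PROHIBITED_TASKS:
        raise PermissionError(f"AI use is not permitted for task: {task}")
    # Unknown tasks fall back to the strictest level rather than the weakest.
    return REVIEW_POLICY.get(task, ReviewLevel.PARTNER_SIGN_OFF)
```

Defaulting to the strictest level means a new or mislabeled task gets more oversight, not less, until the policy is updated to cover it.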

2. Mandatory Team Training

Training should be multifaceted and continuous, covering not just the mechanics of the AI tools, but the corresponding ethical risks:

- Ethics & Risk: Focused sessions on the duty of competence, the nature of hallucinations, and the risks of confidentiality breaches.

- Tool-Specific Limitations: Practical exercises on how to test a specific AI tool’s knowledge limits and identify its failure modes.

- Critical Evaluation: Training junior lawyers to use AI outputs as a foundation for research, not a conclusion, thus mitigating the erosion of professional judgment.

3. Aligning with Regulatory Frameworks

Law firms must proactively align their internal policies with emerging regulatory guidance:

- ABA Model Rules: Ensure policies adhere to Model Rule 1.1 (Competence) and the corresponding comments recognizing the need for technological competence.

- Data Protection Laws: Integrate GDPR, CCPA, and other national/state data laws into AI usage protocols, particularly regarding cross-border data flows and client consent.

- Bar Association Guidance: Monitor and follow any ethics opinions or guidance issued by the local and national bar associations regarding the use of generative AI in legal submissions.

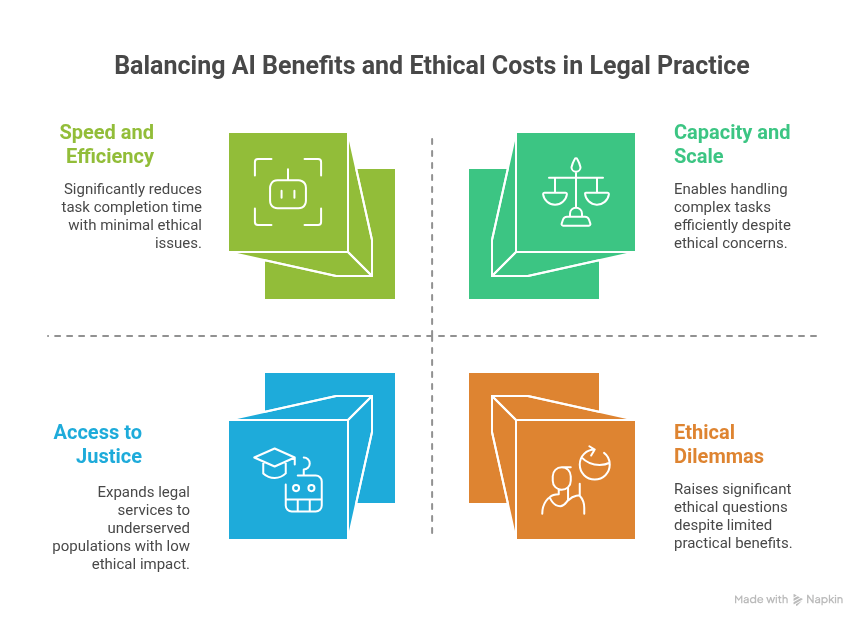

Balancing Benefits Against Ethical Costs

The move toward ethical AI is about enabling the benefits while mitigating the harms. When used responsibly, AI offers significant advantages.

The ethical strategy is to leverage AI for efficiency and scale (routine tasks, summarization, first drafts) while preserving and enhancing the human lawyer’s strategic judgment and accountability (final advice, court submissions, client counseling).

Conclusion: The Moral Imperative of Trustworthy Legal Technology

AI is a potent force that promises to reshape legal services. Its integration into the daily work of lawyers is inevitable, but its success hinges entirely on responsible, ethical adoption. For legal teams considering or already using AI, the path forward is clear and non-negotiable:

- Prioritize Competence: Always verify AI outputs against primary legal authorities.

- Ensure Fairness: Proactively audit tools for bias that could compromise client rights.

- Guarantee Confidentiality: Demand secure, auditable, and privacy-preserving tools that prohibit client data retention for model training.

- Enforce Accountability: Maintain clear human oversight and detailed record-keeping for every AI-assisted piece of work.

Choosing a secure, transparent, and collaborative AI workspace is not merely a performance enhancement; it is a moral imperative. Platforms like Wansom are designed specifically to meet the high ethical standards of the legal profession by embedding oversight checkpoints, robust encryption, and auditable workflows.

By building their operations on such foundations, law firms can embrace the power of AI without compromising their professional duties, ensuring that this new technology serves not just efficiency, but the core values of justice, competence, and client trust.
